A Triple Learner Based Energy Efficient Scheduling for Multi-UAV Assisted Mobile Edge Computing

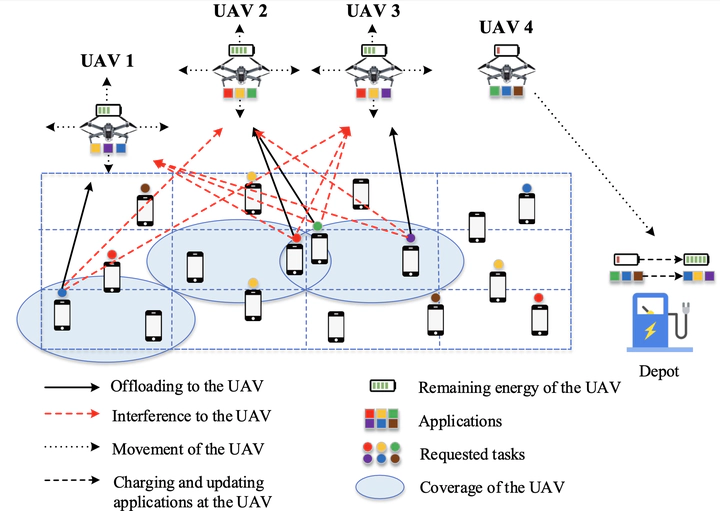

An illustration of the considered multi-UAV assisted MEC system.

Abstract

This paper studies energy-efficient scheduling for mobile edge computing (MEC) assisted by multiple unmanned aerial vehicles (UAVs). In the considered model, UAVs act as mobile edge servers that provide computing services to end-users with task offloading requests. Unlike existing works, we allow each UAV to determine not only its trajectory but also whether to return to the depot to replenish its energy and update its application placement, since battery and storage capacities are limited. To maximize the long-term energy efficiency of all UAVs, i.e., the total amount of offloaded tasks computed by all UAVs divided by their total energy consumption, we formulate a joint optimization of UAV trajectory planning, energy renewal, and application placement. Taking into account the underlying cooperation and competition among intelligent UAVs, we reformulate this problem as three coupled multi-agent stochastic games and propose a novel triple-learner-based reinforcement learning approach, which integrates a trajectory learner, an energy learner, and an application learner, to reach equilibria. Simulations evaluate the performance of the proposed solution and demonstrate its superiority over counterparts.
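
As a rough sketch of the energy-efficiency objective described above, the ratio can be written as follows. The notation is assumed here for illustration only and is not taken from the paper: $\mathcal{U}$ denotes the set of UAVs, $T$ the number of time slots in the horizon, $D_u(t)$ the amount of offloaded tasks computed by UAV $u$ in slot $t$, and $E_u(t)$ the energy consumed by UAV $u$ in slot $t$. The exact long-term form of the objective (for example, averaged or discounted) may differ.

$$
\eta \;=\; \frac{\sum_{t=1}^{T} \sum_{u \in \mathcal{U}} D_u(t)}{\sum_{t=1}^{T} \sum_{u \in \mathcal{U}} E_u(t)}
$$

Under this reading, a return trip to the depot adds to the denominator without adding to the numerator in the slots it occupies, which is why the energy-renewal decision trades off against continued service.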