Differentially Private Federated Multi-Task Learning Framework for Enhancing Human-to-Virtual Connectivity in Human Digital Twin

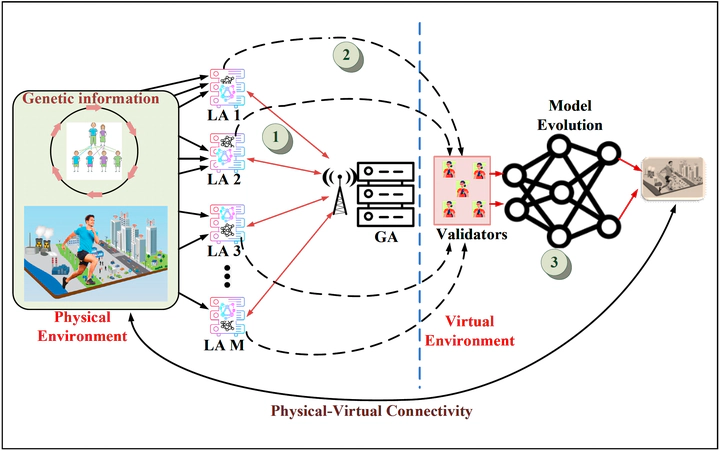

The proposed secure differentially private federated multi-task learning (DPFML) framework for HDT.

Abstract

The reliable update and evolution of a virtual twin in human digital twin (HDT) systems depends on the connectivity scheme implemented between the virtual twin and its physical counterpart. The adopted connectivity scheme must account for HDT-specific requirements, including privacy, security, accuracy and overall connectivity cost. This paper presents a new, secure, privacy-preserving and efficient human-to-virtual twin connectivity scheme for HDT that integrates three key techniques: differential privacy, federated multi-task learning and blockchain. Specifically, we adopt federated multi-task learning, a personalized learning method capable of providing higher accuracy, to capture the impact of heterogeneous environments. Next, we propose a new validation process based on the quality of the models trained during federated multi-task learning to guarantee accurate and authorized model evolution in the virtual environment. The proposed framework accelerates the learning process without sacrificing accuracy, privacy or communication cost, which, we believe, are non-negotiable requirements of HDT networks. Finally, we compare the proposed connectivity scheme with related solutions and show that it can enhance security, privacy and accuracy while reducing the overall connectivity cost.